Enforcing Azure AD / Entra ID on Azure OpenAI

A technical guide on enforcing Azure AD / Entra ID authentication for Azure OpenAI, including scripts to disable local auth and verify the changes.

For those of us who have migrated to the better security practice of using Entra ID with Azure OpenAI, it's a good idea to block static API keys from being used whenever possible.

Matt Felton's blog post was an extremely helpful starting point. The ideal situation would be to set disableLocalAuth (blocking API keys) on the entire resource group, but that can be a bit heavy-handed if you have some legacy applications that may need to access select resources in the group. So, we decided to set the policy on each resource.

The thing is, we have several dozen Azure OpenAI resources. Patching each one by hand would be time-consuming, so we wrote a quick bash script to set disableLocalAuth on every resource.

#!/usr/bin/env bash

# Fetch all Azure OpenAI accounts (Cognitive Services accounts of kind "OpenAI")
resources=$(az resource list --resource-type "Microsoft.CognitiveServices/accounts" --query "[?kind=='OpenAI']")

# Loop through each resource
echo "$resources" | jq -c '.[]' | while read -r resource; do
  # Extract the ID of the resource
  id=$(echo "$resource" | jq -r '.id')

  # Prepare the body for the PATCH request
  body='{"properties": {"disableLocalAuth": true}}'

  # Construct the URI for the PATCH request
  uri="https://management.azure.com${id}?api-version=2021-10-01"

  # Execute the PATCH request; only report success if it actually succeeded
  az rest --method patch --uri "$uri" --body "$body" && echo "Patched resource: $id"
done

Script to set disableLocalAuth on all existing Azure OpenAI resources

After running the script above, we should check after a few minutes to make sure it actually worked. Grab some tea.
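
Before testing with a real key, the property can also be read back from the control plane. This is a minimal sketch, assuming the CLI's JSON output for `az cognitiveservices account list` exposes the flag under `properties.disableLocalAuth`. The function name `report_local_auth` is ours, for illustration only.

```shell
#!/usr/bin/env bash

# Hypothetical helper: print one row per Azure OpenAI account with its
# name, resource group, and disableLocalAuth value.
# Assumption: the flag is exposed as properties.disableLocalAuth in the
# CLI's JSON output.
report_local_auth() {
  az cognitiveservices account list \
    --query "[?kind=='OpenAI'].[name, resourceGroup, properties.disableLocalAuth]" \
    --output tsv
}

# Usage (requires an authenticated az session):
#   report_local_auth
```

Any account that does not report the flag as true was probably missed by the patch or has not finished updating.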

To verify our script worked, let's try using a valid API key. We want (and expect) this to be blocked now.

export AZURE_OPENAI_API_KEY="YOUR_REAL_KEY"
export AZURE_OPENAI_ENDPOINT="https://YOUR_ENDPOINT.openai.azure.com"

curl -v "$AZURE_OPENAI_ENDPOINT/openai/deployments/YOUR_DEPLOYMENT_NAME/chat/completions?api-version=2024-06-01" \
  -H "Content-Type: application/json" \
  -H "api-key: $AZURE_OPENAI_API_KEY" \
  -H "x-ms-client-request-id: auth-tests-bad-$RANDOM" \
  -d '{"messages":[{"role": "user", "content": "Hello."}], "max_tokens": 1, "temperature": 0.0, "seed": 42}'

Attempt to use disallowed API keys

{
  "error": {
    "code": "AuthenticationTypeDisabled",
    "message": "Key based authentication is disabled for this resource."
  }
}

Expected failure when using API keys

Looks good! To prove to ourselves that we weren't just mistyping the api-key value, we will attempt to use a fake API key to see if we get a different error message.

curl -v "$AZURE_OPENAI_ENDPOINT/openai/deployments/YOUR_DEPLOYMENT_NAME/chat/completions?api-version=2024-06-01" \
  -H "Content-Type: application/json" \
  -H "api-key: totally-fake" \
  -H "x-ms-client-request-id: auth-tests-really-bad-$RANDOM" \
  -d '{"messages":[{"role": "user", "content": "Hello."}], "max_tokens": 1, "temperature": 0.0, "seed": 42}'

Attempt to use completely bogus API key as a sanity check

{
  "error": {
    "code": "401",
    "message": "Access denied due to invalid subscription key or wrong API endpoint. Make sure to provide a valid key for an active subscription and use a correct regional API endpoint for your resource."
  }
}

Expected failure when using a fake API key, proving our previous result is correct
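
The two failures differ in their `error.code` value, so when checking many endpoints it can help to label each response body automatically. Here is a rough sketch. The function name `classify_auth_error` and its labels are made up for illustration, and it uses plain substring matching rather than real JSON parsing.

```shell
#!/usr/bin/env bash

# Hypothetical helper: label a response body by the error code it contains.
# Substring matching is enough for a quick sanity check, but it is not a
# JSON parser and should not be relied on for anything stricter.
classify_auth_error() {
  local body="$1"
  case "$body" in
    *'"AuthenticationTypeDisabled"'*) echo "keys-disabled" ;;
    *'"code": "401"'* | *'"code":"401"'*) echo "invalid-key" ;;
    *'"choices"'*) echo "success" ;;
    *) echo "unknown" ;;
  esac
}

# Usage: capture the body from curl (with -s instead of -v) and pass it in:
#   classify_auth_error "$response_body"
```

A result of "keys-disabled" for a real key and "invalid-key" for a fake one matches the two outcomes shown above.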

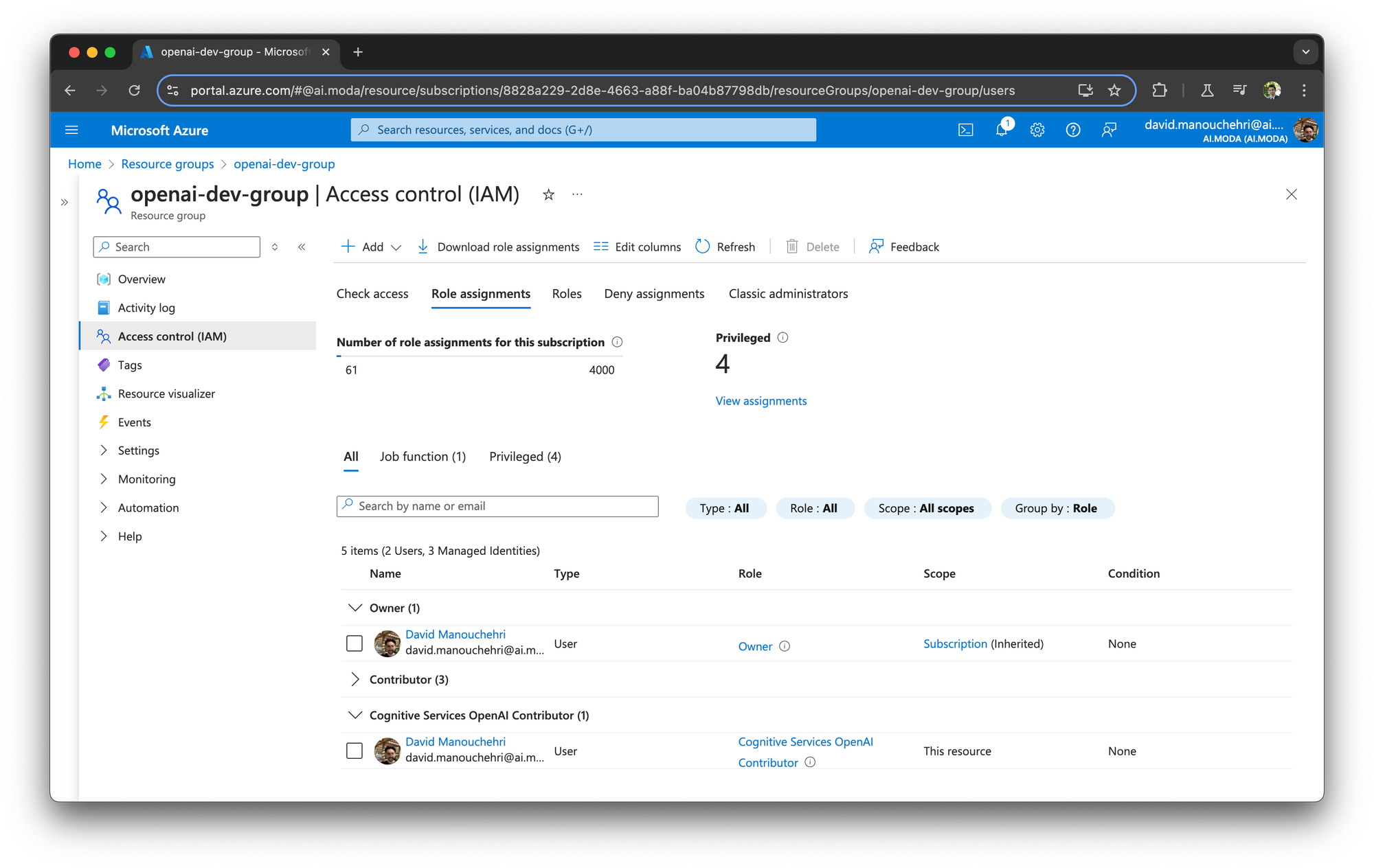

Awesome! Now, to test out Entra ID, you'll first need to add yourself to the resource group as a Cognitive Services OpenAI Contributor (or similar). (Matt's blog post pointed this out as well, but we discovered that adding yourself in just the resource group is enough; no need to do it on each individual resource.)
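
The same role assignment can be made from the CLI instead of the portal. This is a sketch, assuming you are signed in with `az login` and are allowed to assign roles on the resource group. The function name `assign_openai_role` and its three arguments are placeholders of ours.

```shell
#!/usr/bin/env bash

# Hypothetical helper: grant a principal the role at resource-group scope.
# $1 = subscription ID, $2 = resource group name,
# $3 = assignee (for example a user principal name or object ID).
assign_openai_role() {
  local subscription_id="$1" resource_group="$2" assignee="$3"
  az role assignment create \
    --assignee "$assignee" \
    --role "Cognitive Services OpenAI Contributor" \
    --scope "/subscriptions/${subscription_id}/resourceGroups/${resource_group}"
}

# Usage (all values are placeholders):
#   assign_openai_role "YOUR_SUBSCRIPTION_ID" "YOUR_RESOURCE_GROUP" "you@example.com"
```

Scoping to the resource group rather than each resource keeps this to a single assignment, in line with what we found above.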

Cognitive Services OpenAI Contributor! Being the Owner isn't enough.

Our generated access tokens from get-access-token should have permission now! Time to test it out.

export AZURE_OPENAI_AD_TOKEN=$(az account get-access-token --scope "https://cognitiveservices.azure.com/.default" --query accessToken --output tsv)

curl -v "$AZURE_OPENAI_ENDPOINT/openai/deployments/YOUR_DEPLOYMENT_NAME/chat/completions?api-version=2024-06-01" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer $AZURE_OPENAI_AD_TOKEN" \
  -H "x-ms-client-request-id: auth-tests-good-$RANDOM" \
  -d '{"messages":[{"role": "user", "content": "Hello."}], "max_tokens": 1, "temperature": 0.0, "seed": 42}'

Using Entra ID with AOAI

{
  "choices": [
    {
      "finish_reason": "length",
      "index": 0,
      "logprobs": null,
      "message": {
        "content": "Hello",
        "role": "assistant"
      }
    }
  ],
  "created": 1721926679,
  "id": "chatcmpl-9ow4tzxpfzkuN9EtOcNu2IcjUGyl2",
  "model": "gpt-35-turbo",
  "object": "chat.completion",
  "system_fingerprint": "fp_811936bd4f",
  "usage": {
    "completion_tokens": 1,
    "prompt_tokens": 9,
    "total_tokens": 10
  }
}

Success with Entra ID!

That's it! If you do not need to do any further testing, we would recommend you remove yourself from the Cognitive Services OpenAI Contributor role.
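
If the assignment was made at resource-group scope, removing it from the CLI might look like the sketch below. The function name `remove_openai_role` and its arguments are placeholders of ours, and the scope must match the one the role was originally granted on.

```shell
#!/usr/bin/env bash

# Hypothetical helper: remove a principal's role at resource-group scope.
# $1 = subscription ID, $2 = resource group name,
# $3 = assignee (for example a user principal name or object ID).
remove_openai_role() {
  local subscription_id="$1" resource_group="$2" assignee="$3"
  az role assignment delete \
    --assignee "$assignee" \
    --role "Cognitive Services OpenAI Contributor" \
    --scope "/subscriptions/${subscription_id}/resourceGroups/${resource_group}"
}

# Usage (all values are placeholders):
#   remove_openai_role "YOUR_SUBSCRIPTION_ID" "YOUR_RESOURCE_GROUP" "you@example.com"
```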