Identifying Anthropic Claude Errors on Amazon Bedrock

Learn how to identify and troubleshoot errors with Claude 3.5 Sonnet on Amazon Bedrock using CloudWatch and CloudTrail.

So you're using Claude 3.5 Sonnet on Amazon Bedrock? Excellent choice! AWS has the best privacy policy I've seen so far for Anthropic models: it won't store any prompts for human review (ever).

A common question is: how reliable is it compared to Anthropic's API or Google Vertex AI? You can set up Langfuse or Cloudflare AI Gateway to monitor this (we recommend both), but AWS also has a built-in solution:

CloudWatch and CloudTrail!

Neither requires enabling anything beyond what's already on by default.

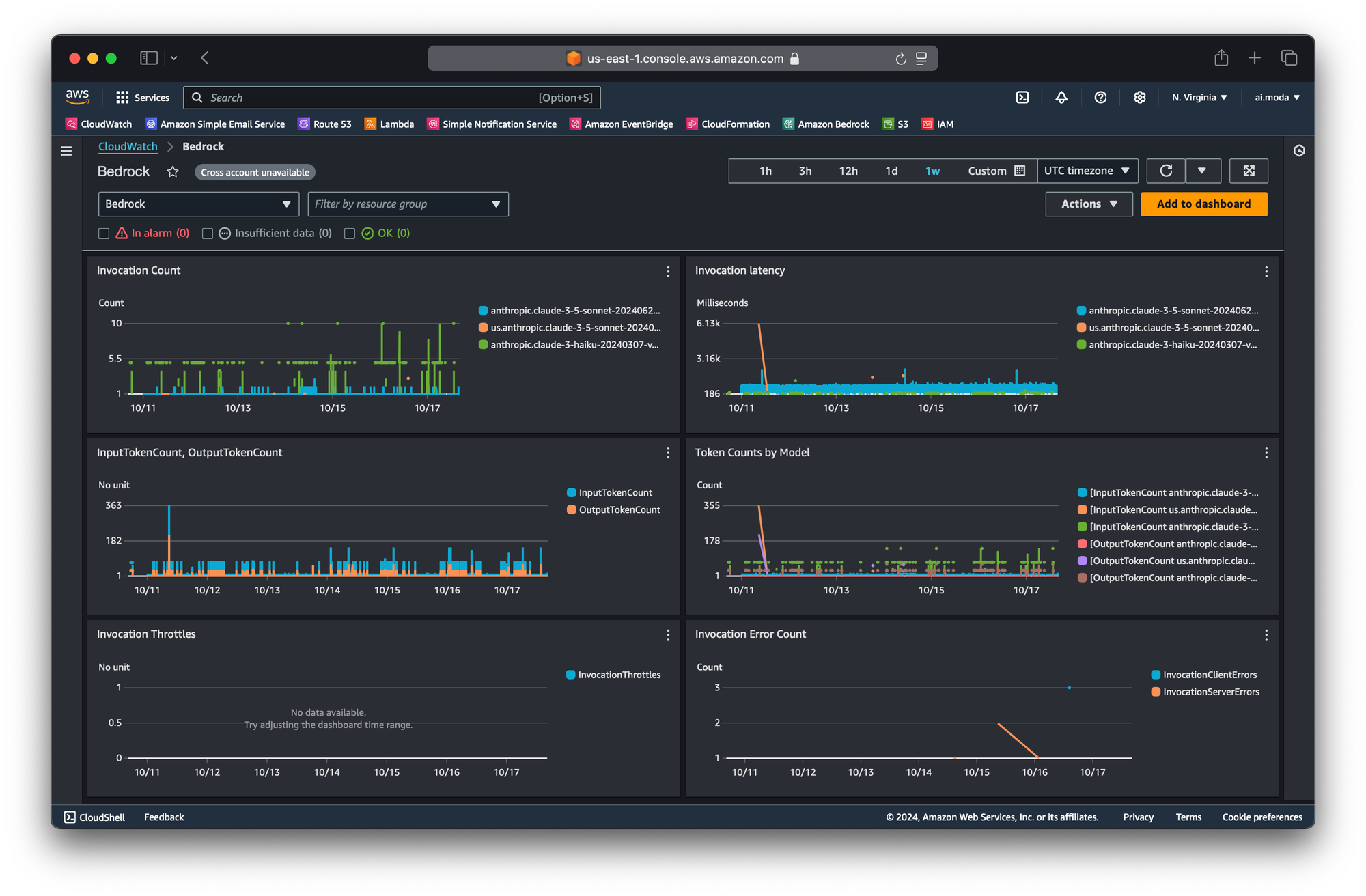

Let's go over CloudWatch first. AWS has already created a handy default dashboard for us, which you can find in the CloudWatch console.

That's it! You can see we've only had three InvocationServerErrors in the past week in us-east-1.
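If you'd rather pull that number programmatically, here is a minimal Python sketch using boto3's CloudWatch `get_metric_statistics`. It assumes the runtime metrics are published in the `AWS/Bedrock` namespace with a `ModelId` dimension (double-check both against your dashboard's metric source). The helper names `build_metric_query` and `fetch_server_errors` are our own, for illustration only.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Model ID used throughout this post.
MODEL_ID = "anthropic.claude-3-5-sonnet-20240620-v1:0"


def build_metric_query(model_id: str, days: int = 7, now: Optional[datetime] = None) -> dict:
    """Build GetMetricStatistics arguments for daily InvocationServerErrors sums."""
    end = now or datetime.now(timezone.utc)
    return {
        "Namespace": "AWS/Bedrock",  # assumption: Bedrock runtime metrics namespace
        "MetricName": "InvocationServerErrors",
        "Dimensions": [{"Name": "ModelId", "Value": model_id}],  # assumption: per-model dimension
        "StartTime": end - timedelta(days=days),
        "EndTime": end,
        "Period": 86400,  # one datapoint per day
        "Statistics": ["Sum"],
    }


def fetch_server_errors(model_id: str = MODEL_ID, region: str = "us-east-1", days: int = 7) -> float:
    """Total InvocationServerErrors for one model (requires boto3 and AWS credentials)."""
    import boto3  # deferred so build_metric_query works without boto3 installed

    cloudwatch = boto3.client("cloudwatch", region_name=region)
    response = cloudwatch.get_metric_statistics(**build_metric_query(model_id, days))
    # Each datapoint holds one day's Sum; add them up for the whole window.
    return sum(point["Sum"] for point in response["Datapoints"])
```

Calling `fetch_server_errors()` returns the past week's total for the model ID above. The query builder is kept separate so you can inspect the request without AWS credentials.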

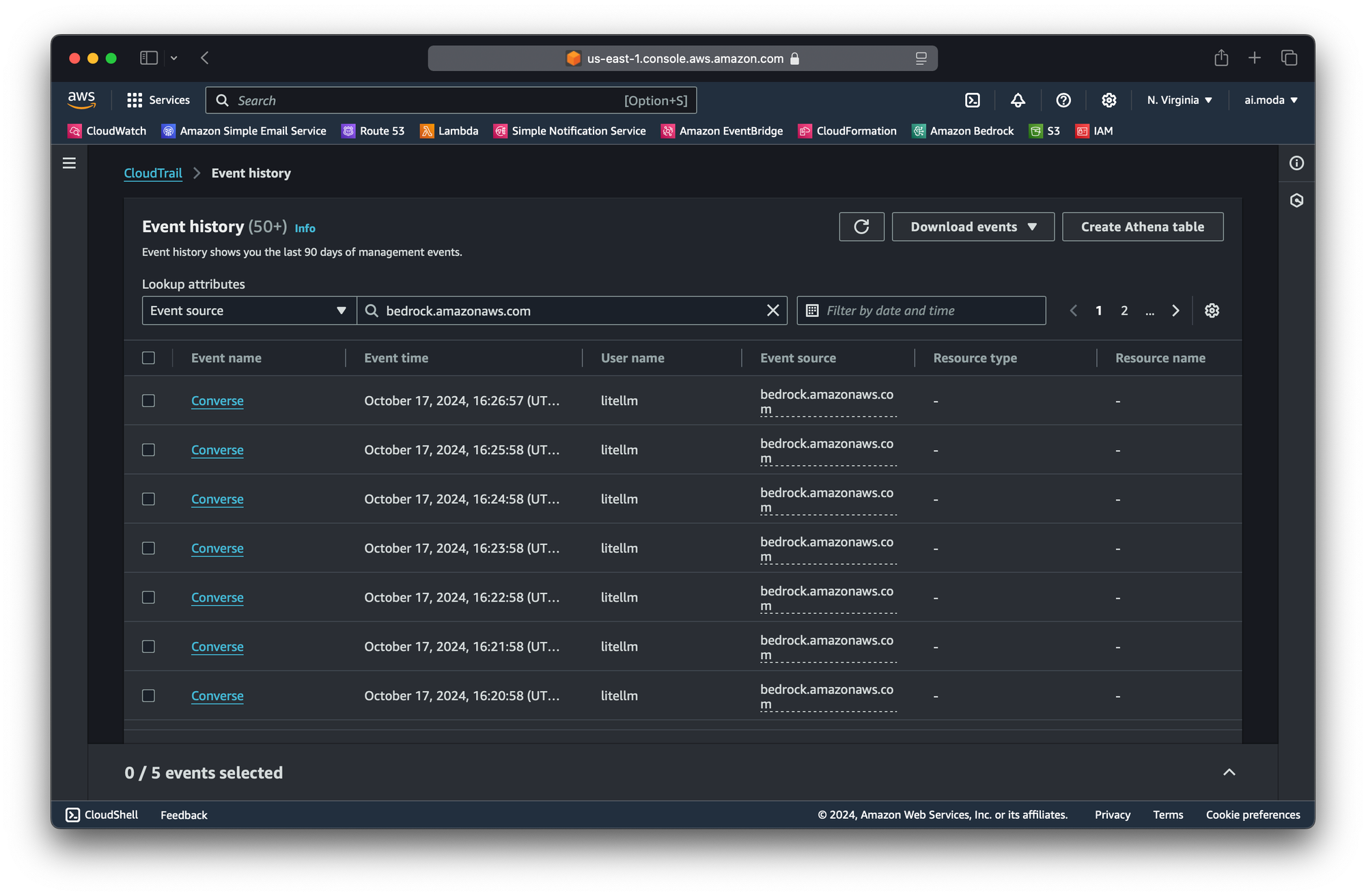

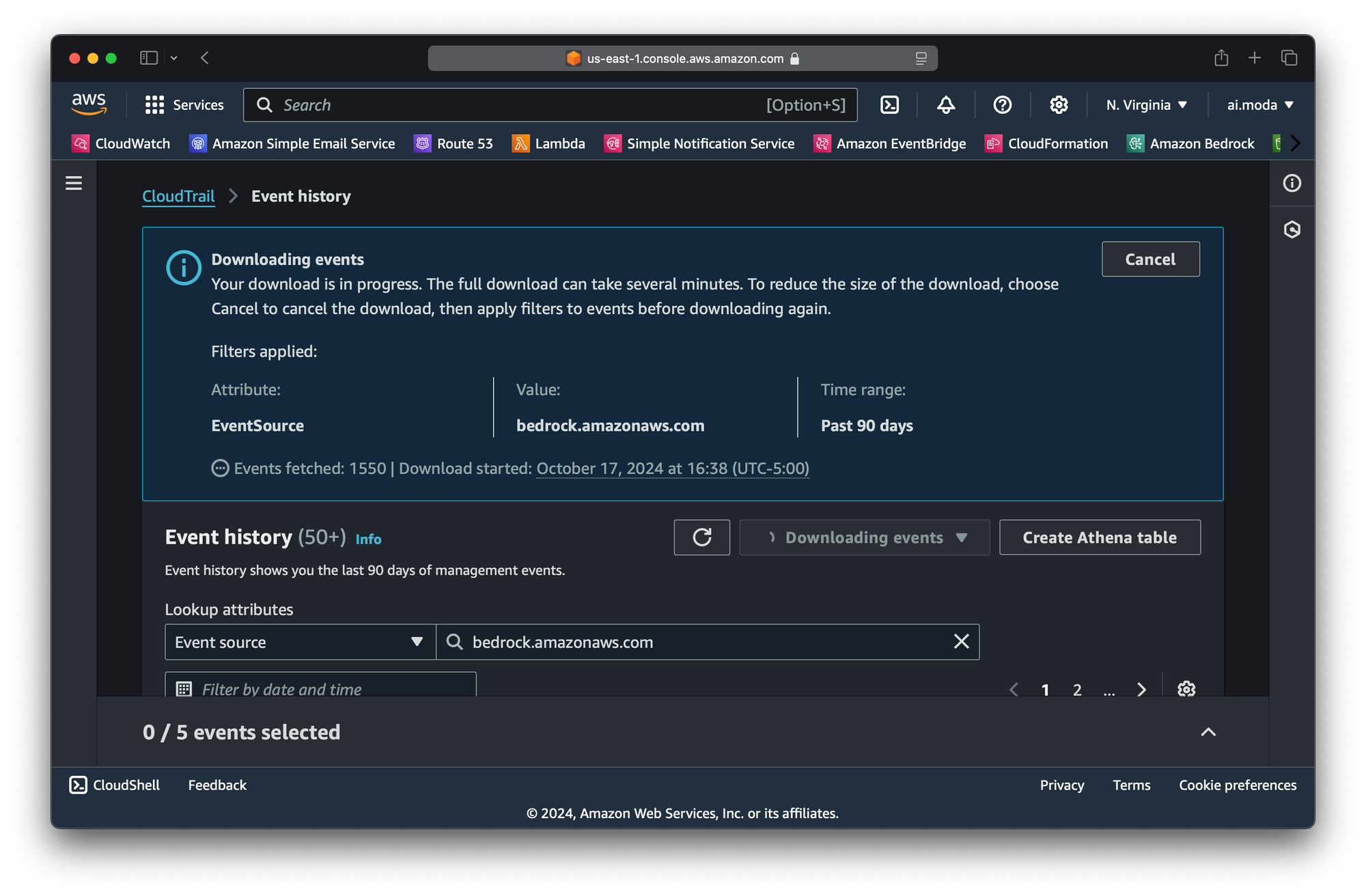

Moving on to CloudTrail: in Event history, we just need to filter by Event source = bedrock.amazonaws.com to see all the Bedrock calls.

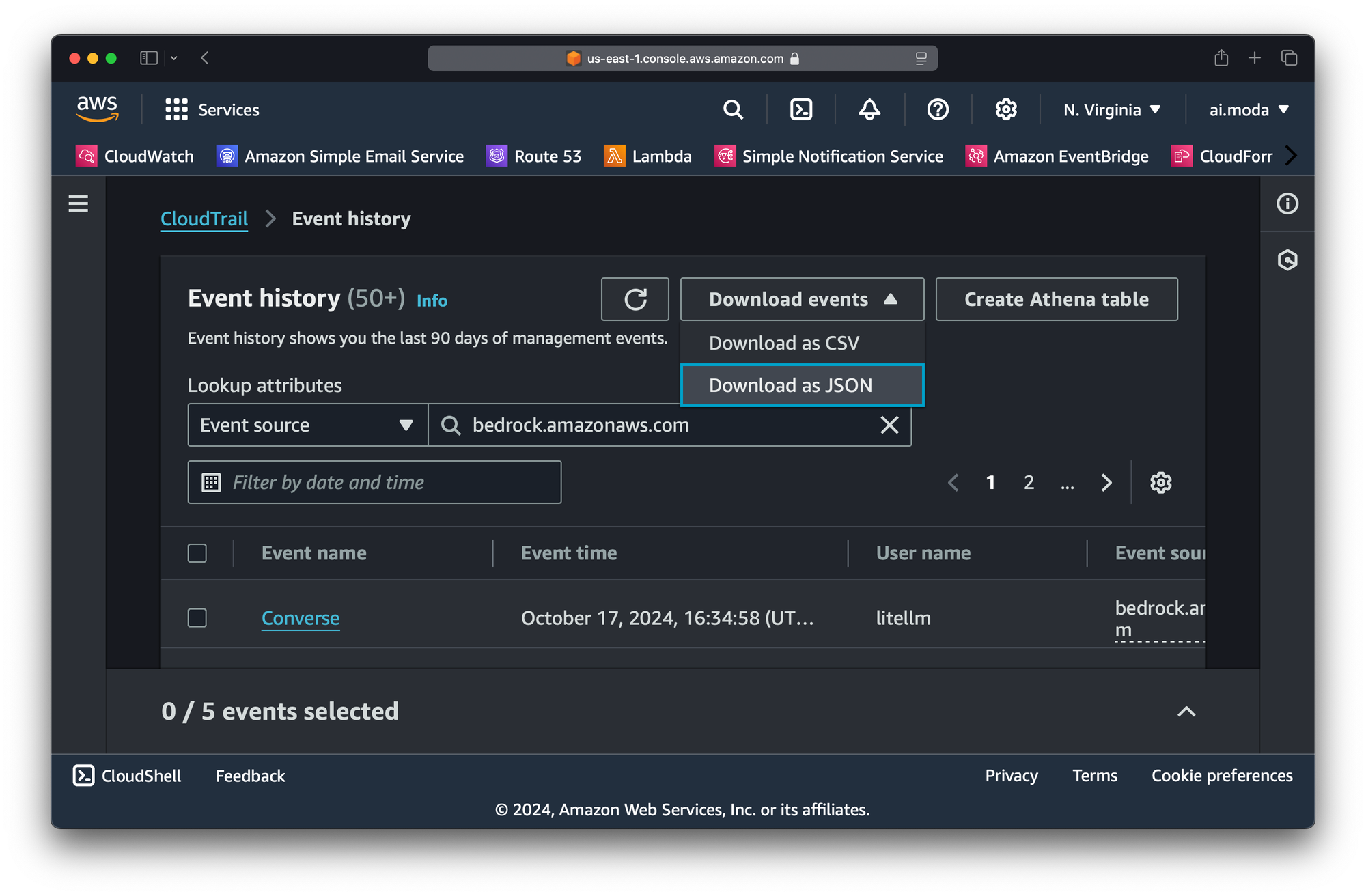

Next, click Download events, then Download as JSON.

Now wait a few minutes while all Amazon Bedrock CloudTrail events from the past 90 days are downloaded.
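Prefer to skip the console? Here's a sketch of the same query using boto3's CloudTrail `lookup_events` paginator, which filters on the `EventSource` lookup attribute the same way the console does. Each returned event carries its full record as a JSON string in `CloudTrailEvent`. The helper `save_records` is hypothetical: it writes the records in the `{"Records": [...]}` shape the console download uses, so the jq commands in this post work unchanged. LookupEvents has a low request-rate limit, so a large history can take a while.

```python
import json
from typing import Iterator

# Same filter as the console: Event source = bedrock.amazonaws.com.
BEDROCK_FILTER = [{"AttributeKey": "EventSource", "AttributeValue": "bedrock.amazonaws.com"}]


def parse_event(event: dict) -> dict:
    """LookupEvents nests the full CloudTrail record as a JSON string."""
    return json.loads(event["CloudTrailEvent"])


def lookup_bedrock_records(region: str = "us-east-1") -> Iterator[dict]:
    """Yield Bedrock records from CloudTrail Event history (requires boto3 and credentials)."""
    import boto3  # deferred so the helpers here work without boto3 installed

    client = boto3.client("cloudtrail", region_name=region)
    paginator = client.get_paginator("lookup_events")
    for page in paginator.paginate(LookupAttributes=BEDROCK_FILTER):
        for event in page["Events"]:
            yield parse_event(event)


def save_records(records: list, path: str = "event_history.json") -> None:
    """Write records in the {"Records": [...]} shape the jq commands in this post expect."""
    with open(path, "w") as f:
        json.dump({"Records": records}, f)
```

Running `save_records(list(lookup_bedrock_records()))` produces an `event_history.json` you can query exactly like the console download.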

Once the file has downloaded, we can use jq to filter for all events that used Claude 3.5 Sonnet!

```shell
jq '.Records[] | select(.requestParameters.modelId == "anthropic.claude-3-5-sonnet-20240620-v1:0")' event_history.json
```

Wow, that's... a lot. Let's do a count instead.

```shell
jq '[.Records[] | select(.requestParameters.modelId == "anthropic.claude-3-5-sonnet-20240620-v1:0")] | length' event_history.json
```

We have a total of 9,782 requests in here. Now, how about details on errors?

```shell
jq '.Records[] | select(.requestParameters.modelId == "anthropic.claude-3-5-sonnet-20240620-v1:0" and (.errorCode != null or .errorMessage != null))' event_history.json
```

Finally, let's do a count instead.

```shell
jq '[.Records[] | select(.requestParameters.modelId == "anthropic.claude-3-5-sonnet-20240620-v1:0" and (.errorCode != null or .errorMessage != null))] | length' event_history.json
```

We get a mere 4! That puts our total error rate at a tiny 0.04% (4 / 9,782).
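jq gets the job done. If you want every model ID's error rate in one pass, plus a breakdown by error code, here is a small Python sketch that reimplements the filters above. A record counts as an error under the same rule as the jq filter: a non-null `errorCode` or `errorMessage`. The function names are ours, for illustration.

```python
from collections import Counter, defaultdict


def is_error(record: dict) -> bool:
    # Mirrors the jq filter: errorCode or errorMessage is present and non-null.
    return record.get("errorCode") is not None or record.get("errorMessage") is not None


def model_id_of(record: dict):
    # requestParameters can be null, just as jq tolerates .requestParameters.modelId on null.
    return (record.get("requestParameters") or {}).get("modelId")


def error_rates(records: list) -> dict:
    """Map each modelId to (requests, errors, error rate in percent)."""
    counts = defaultdict(lambda: [0, 0])
    for record in records:
        model_id = model_id_of(record)
        if model_id is None:
            continue  # skip records that carry no modelId
        counts[model_id][0] += 1
        counts[model_id][1] += int(is_error(record))
    return {m: (n, e, 100.0 * e / n) for m, (n, e) in counts.items()}


def error_codes(records: list, model_id: str) -> Counter:
    """Count one model's failed requests by errorCode."""
    return Counter(
        record.get("errorCode") or "(no errorCode)"
        for record in records
        if model_id_of(record) == model_id and is_error(record)
    )


# Usage (assumes the console download is in the current directory):
# import json
# with open("event_history.json") as f:
#     records = json.load(f)["Records"]
# print(error_rates(records)["anthropic.claude-3-5-sonnet-20240620-v1:0"])
```

For the in-region model ID, this reproduces the arithmetic above: 100 × 4 / 9,782 ≈ 0.04%.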

If we look at us.anthropic.claude-3-5-sonnet-20240620-v1:0, the picture isn't as pretty: we see 318 errors out of 12,359 requests, an error rate of 2.57%. (This was due to an outage of the cross-region Claude 3.5 Sonnet profile in us-east-1, which lasted several hours on October 3-4, 2024.) This highlights the need for failover in your code, even if you're using cross-region profiles (which can fail, and have).
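Here is a minimal failover sketch. It assumes you wrap whatever invocation you already use in a function that takes a model ID. `invoke_with_failover` and the ordering in `FAILOVER_MODEL_IDS` are hypothetical, and this version only rotates between the two Bedrock IDs discussed here.

```python
from typing import Callable, Optional, Sequence, TypeVar

T = TypeVar("T")

# Illustrative order only: cross-region profile first, then the in-region model ID.
FAILOVER_MODEL_IDS = (
    "us.anthropic.claude-3-5-sonnet-20240620-v1:0",
    "anthropic.claude-3-5-sonnet-20240620-v1:0",
)


def invoke_with_failover(call: Callable[[str], T], model_ids: Sequence[str] = FAILOVER_MODEL_IDS) -> T:
    """Call `call(model_id)` for each ID in order and return the first success."""
    last_error: Optional[Exception] = None
    for model_id in model_ids:
        try:
            return call(model_id)
        except Exception as err:  # in production, narrow this (e.g. botocore's ClientError)
            last_error = err
    raise RuntimeError("every model ID failed") from last_error


# Usage sketch with the Bedrock Converse API (requires boto3 and credentials):
# runtime = boto3.client("bedrock-runtime", region_name="us-east-1")
# reply = invoke_with_failover(
#     lambda model_id: runtime.converse(
#         modelId=model_id,
#         messages=[{"role": "user", "content": [{"text": "Hello"}]}],
#     )
# )
```

A fuller version could also fail over to another region or to Anthropic's API. That would mean passing richer targets than bare model IDs, but the try-in-order loop stays the same.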