Using Regional Google Storage Buckets with S3 APIs

Learn how to use regional GCS buckets with S3 API compatibility for data sovereignty and compliance. This guide covers creating a bucket, setting permissions, and using HMAC for S3 API access. Test your setup with rclone and create presigned URLs for secure file sharing.

For organizations that prioritize data sovereignty and compliance, regional Google Cloud Storage (GCS) buckets with S3 API compatibility are a good fit: you pick the region, and your existing S3 tooling keeps working. This post is a quick guide to getting started with them.

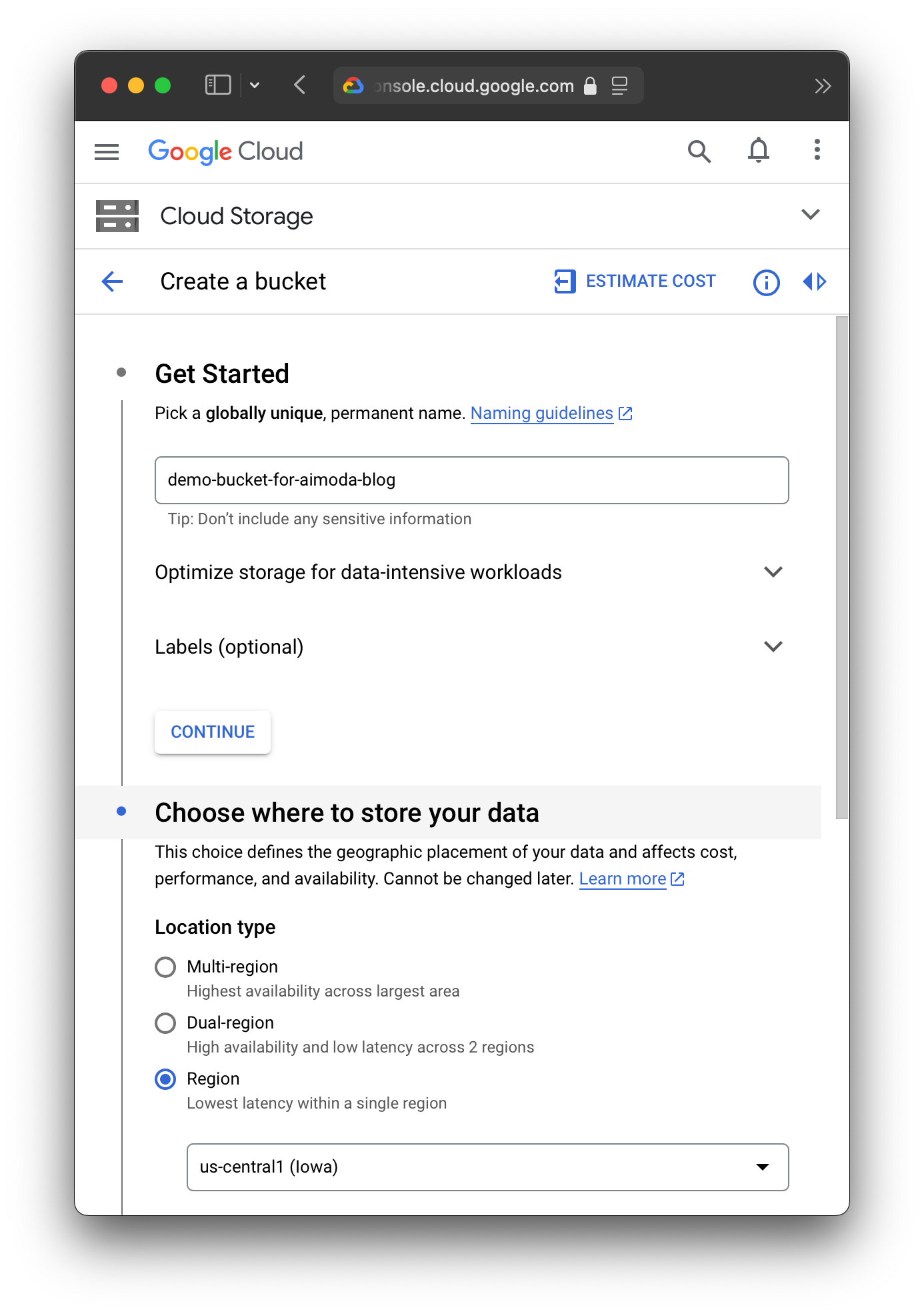

First, create a bucket in a region that has a supported regional endpoint. We'll use us-central1 for our example.

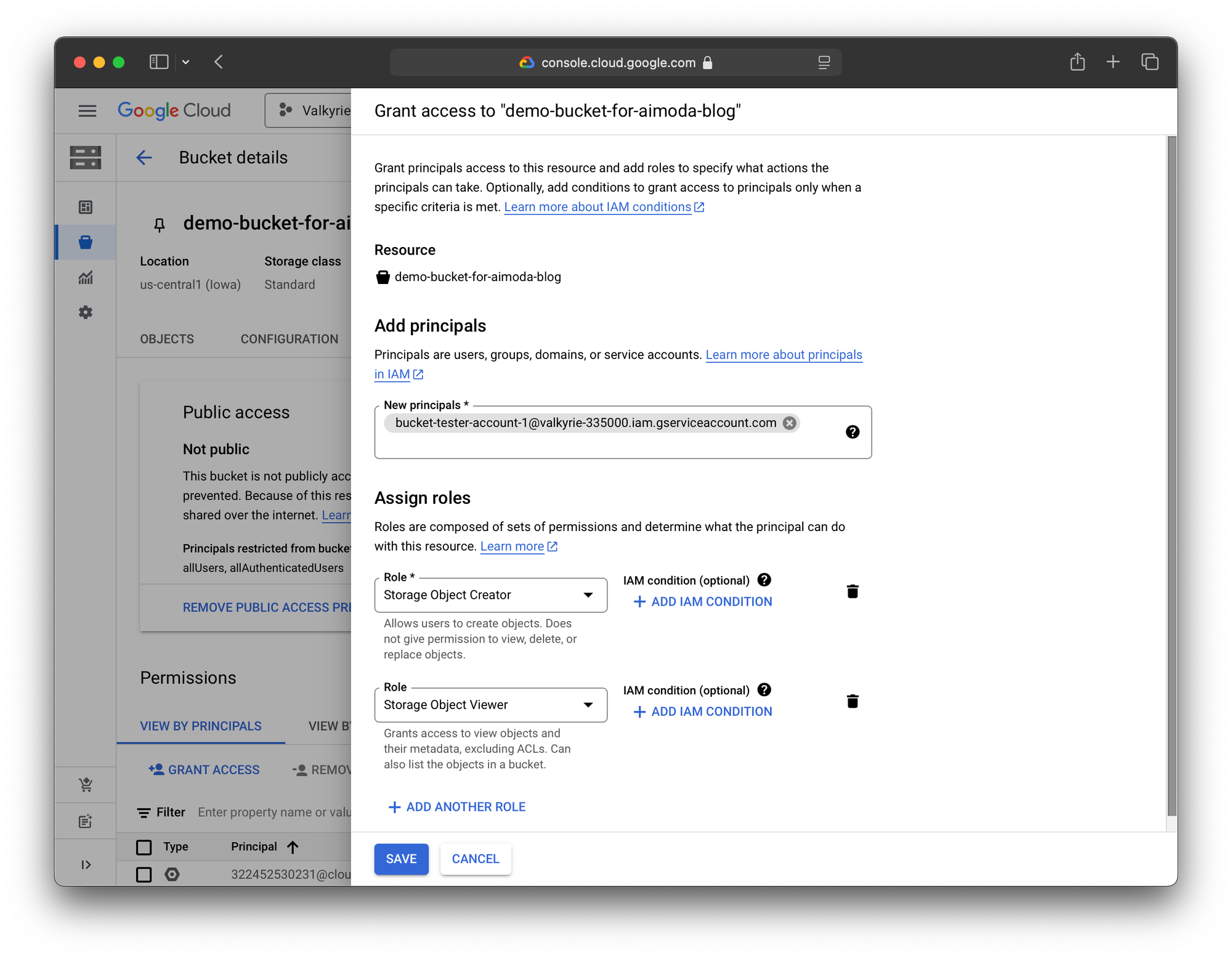

Next, give a service account the appropriate permissions. In our case, we'll grant it access to read and write files. (If this service account were only used to share existing files, granting just Storage Object Viewer would be the correct and more secure setup.)

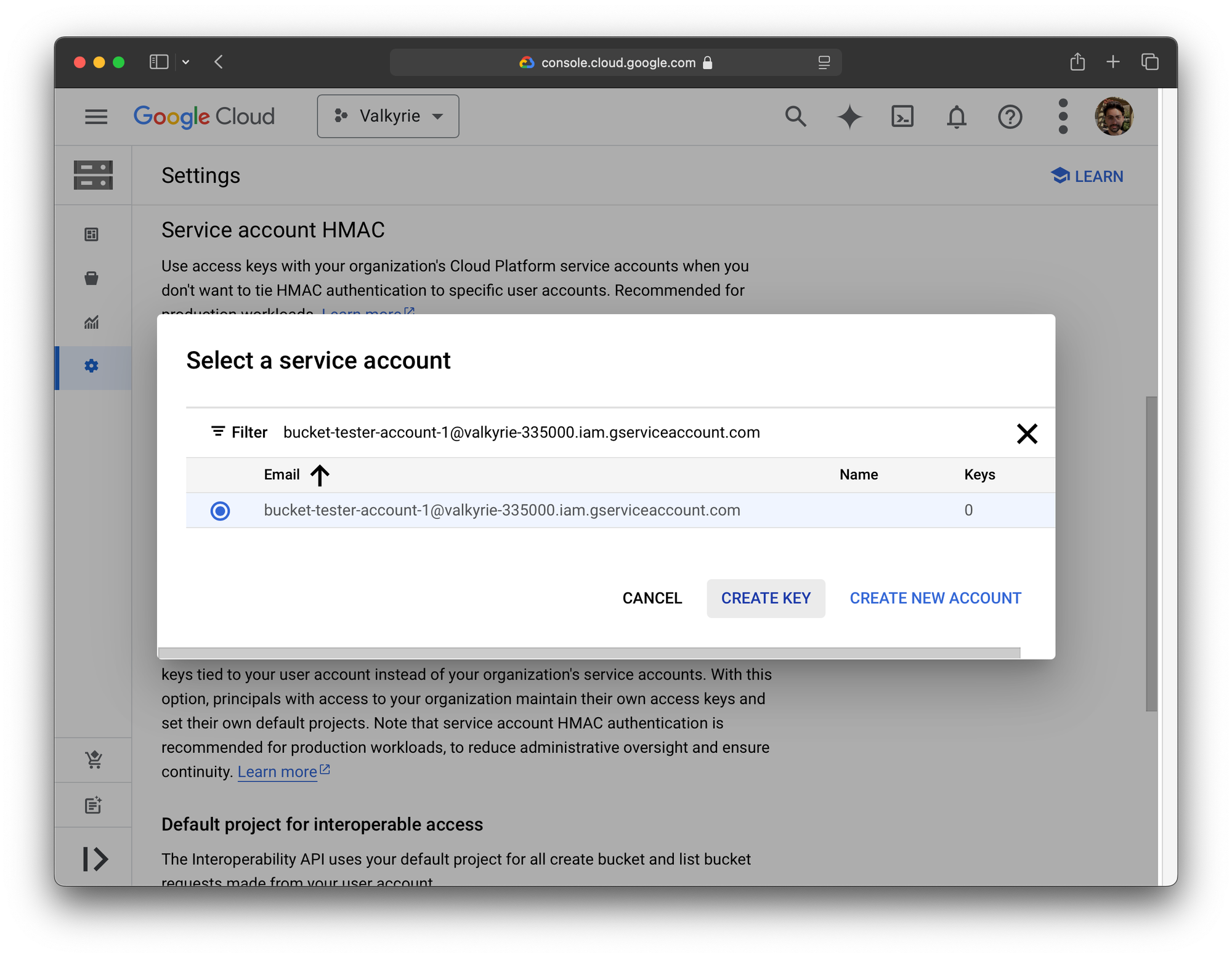

And finally, create an HMAC key for the service account. This lets us use standard S3 API calls from any application that supports S3 (and a lot of them do).
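To give a rough idea of what the HMAC key is for: S3-style clients don't send the secret itself. They use it to sign each request with AWS Signature Version 4, the `AWS4-HMAC-SHA256` scheme that appears in the presigned URL later in this post. The sketch below shows only the signing-key derivation and final signature step, using Python's standard library. The function names, the placeholder secret, and the placeholder string-to-sign are illustrative assumptions, not what rclone or Google runs internally.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, message: str) -> bytes:
    """One HMAC-SHA256 step, returning raw bytes."""
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest()


def derive_signing_key(secret: str, date: str, region: str, service: str = "s3") -> bytes:
    """Chain date -> region -> service -> 'aws4_request', as SigV4 describes."""
    k_date = _hmac_sha256(("AWS4" + secret).encode("utf-8"), date)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


def sign(secret: str, date: str, region: str, string_to_sign: str) -> str:
    """Hex signature over an already-built SigV4 string-to-sign."""
    key = derive_signing_key(secret, date, region)
    return hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()


# Placeholder values only (assumptions for illustration); never hard-code a real secret.
EXAMPLE_SECRET = "example-secret-not-a-real-key"
signature = sign(EXAMPLE_SECRET, "20241011", "auto", "placeholder string-to-sign")
print(len(signature))  # a SHA-256 hex digest is 64 characters long
```

In practice an S3 client library builds the string-to-sign and does all of this for you; the point is only that the HMAC secret never travels over the wire.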

That's it! To test out our new bucket, we will use rclone.

    [gcs-testing-1]
    type = s3
    provider = Other
    force_path_style = true
    region = auto
    access_key_id = GOOG1EABCDEFGHIJKLMNOPQRSTUVWXYZABCDEFGHIJKLMNOPQRSTUVWXYZ123
    secret_access_key = ZNNdQr7q3VSJZM59JDuGQQdhM8BdEWs7yRl+gWfJCsbC
    endpoint = https://storage.us-central1.rep.googleapis.com

~/.config/rclone/rclone.conf
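Since the whole point is to stay on the regional endpoint, it can be worth sanity-checking the remote before copying anything. Here is a minimal sketch that parses an rclone-style config with Python's `configparser` and extracts the region from the endpoint host. The `storage.<region>.rep.googleapis.com` pattern is inferred from the single endpoint used in this post, and the function name and placeholder credentials are assumptions made for illustration.

```python
import configparser
from urllib.parse import urlparse

# Same shape as the remote above, with placeholder credentials.
SAMPLE_CONFIG = """
[gcs-testing-1]
type = s3
provider = Other
force_path_style = true
region = auto
access_key_id = GOOG1EXAMPLEACCESSID
secret_access_key = example-secret
endpoint = https://storage.us-central1.rep.googleapis.com
"""


def regional_endpoint_region(config_text: str, remote: str) -> str:
    """Return the region embedded in the remote's endpoint host, or raise ValueError."""
    # interpolation=None so a '%' inside a secret is not treated as a placeholder.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(config_text)
    section = parser[remote]
    if section.get("type") != "s3":
        raise ValueError(f"remote {remote!r} is not an S3-type remote")

    endpoint = section.get("endpoint", "")
    url = urlparse(endpoint)
    host = url.hostname or ""
    prefix, suffix = "storage.", ".rep.googleapis.com"
    region = host[len(prefix):-len(suffix)]
    if (url.scheme != "https" or not host.startswith(prefix)
            or not host.endswith(suffix) or not region):
        raise ValueError(f"endpoint {endpoint!r} does not look like a regional endpoint")
    return region


print(regional_endpoint_region(SAMPLE_CONFIG, "gcs-testing-1"))  # us-central1
```

To check a real setup, you could pass in the contents of `~/.config/rclone/rclone.conf` instead of `SAMPLE_CONFIG`.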

We'll copy in a small text file as a test.

    rclone copy --progress --header-upload='Cache-Control: public, immutable, max-age=31536000, s-maxage=31536000' --header-upload='Content-Language: en' test.1.txt gcs-testing-1:demo-bucket-for-aimoda-blog/

Then use the handy rclone link command to make a presigned URL.

    rclone link gcs-testing-1:demo-bucket-for-aimoda-blog/test.1.txt

This prints a URL similar to the one below. (Note: this URL won't work by the time this post is published; it's just for demo purposes.)

    https://storage.us-central1.rep.googleapis.com/demo-bucket-for-aimoda-blog/test.1.txt?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=GOOG1E4OBXPBRG23OA4WVVDBG4624N4AEJWRK5BP5VKMUIKHTL6G7K2H2VRPQ%2F20241011%2Fauto%2Fs3%2Faws4_request&X-Amz-Date=20241011T171330Z&X-Amz-Expires=604800&X-Amz-SignedHeaders=host&x-id=GetObject&X-Amz-Signature=6abde5e3f89d5a1a71ad8a74d78a1133598aee7e9b59c2fbd9880ccea0483ff9

Example presigned URL.
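The query string of a presigned URL is readable, so you can check what you are about to share. As a small sketch, the snippet below pulls out the credential scope and works out when the link expires. It uses the same date and lifetime as the URL above, but with a placeholder access ID and signature; the function name and returned keys are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone
from urllib.parse import parse_qs, urlsplit

# Shortened stand-in for the URL above (placeholder access ID and signature).
EXAMPLE_URL = (
    "https://storage.us-central1.rep.googleapis.com/demo-bucket-for-aimoda-blog/test.1.txt"
    "?X-Amz-Algorithm=AWS4-HMAC-SHA256"
    "&X-Amz-Credential=GOOG1EXAMPLE%2F20241011%2Fauto%2Fs3%2Faws4_request"
    "&X-Amz-Date=20241011T171330Z"
    "&X-Amz-Expires=604800"
    "&X-Amz-SignedHeaders=host"
    "&X-Amz-Signature=0000"
)


def describe_presigned_url(url: str) -> dict:
    """Extract host, credential scope, and expiry time from a SigV4 presigned URL."""
    parts = urlsplit(url)
    query = {name: values[0] for name, values in parse_qs(parts.query).items()}

    # Credential is "<access id>/<date>/<region>/<service>/aws4_request".
    access_id, scope_date, region, service, _terminator = query["X-Amz-Credential"].split("/")

    signed_at = datetime.strptime(query["X-Amz-Date"], "%Y%m%dT%H%M%SZ").replace(
        tzinfo=timezone.utc
    )
    lifetime = timedelta(seconds=int(query["X-Amz-Expires"]))
    return {
        "host": parts.hostname,
        "access_id": access_id,
        "scope_date": scope_date,
        "region": region,
        "service": service,
        "signed_at": signed_at,
        "expires_at": signed_at + lifetime,
    }


info = describe_presigned_url(EXAMPLE_URL)
print(info["expires_at"].isoformat())  # 2024-10-18T17:13:30+00:00
```

Here `X-Amz-Expires=604800` is exactly seven days in seconds, which is why the link stops working a week after it was signed.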

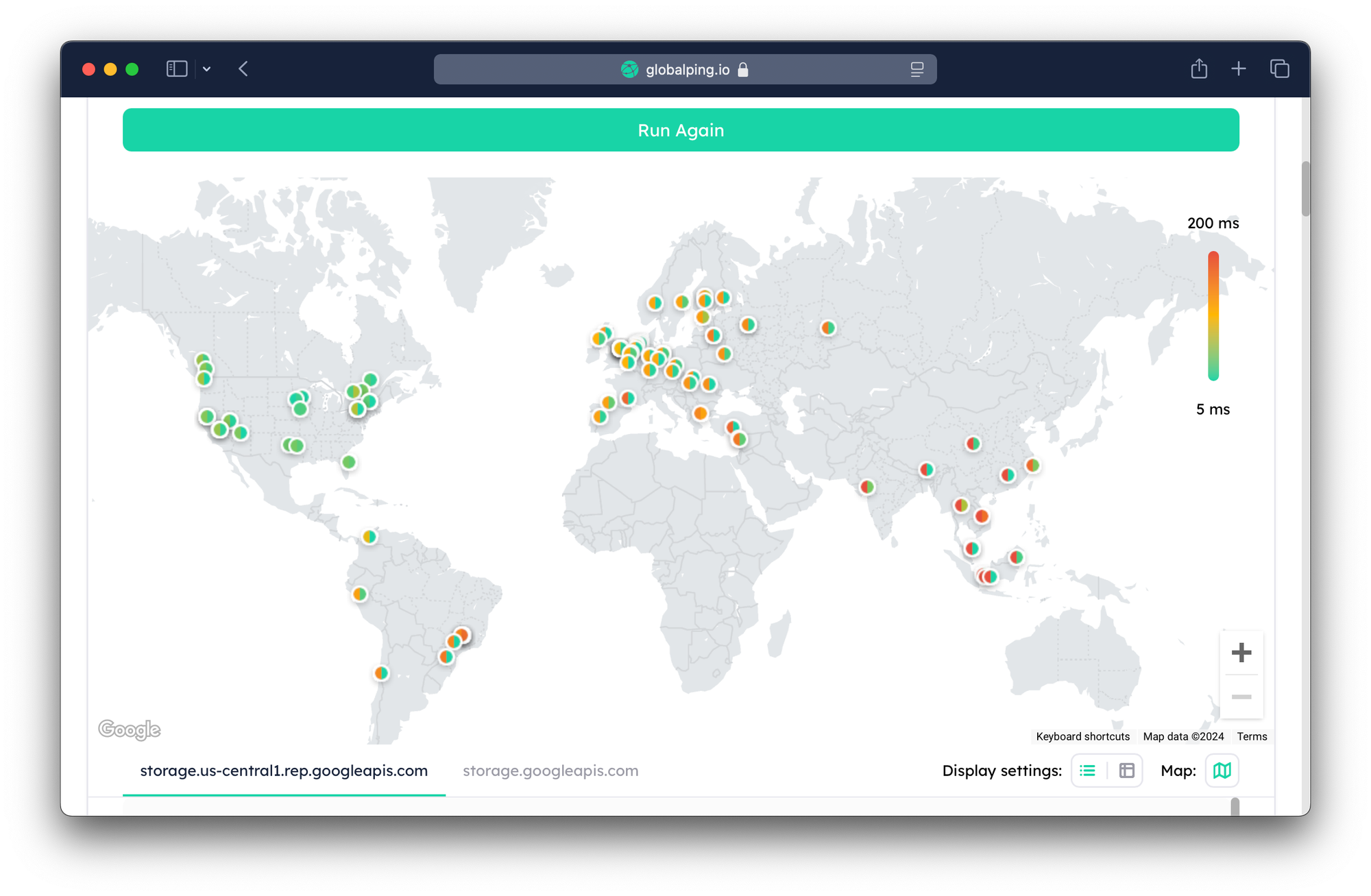

You can confirm that this endpoint is served from us-central1 by comparing its latency with that of the standard storage.googleapis.com endpoint. (Thanks to Globalping!)